Research

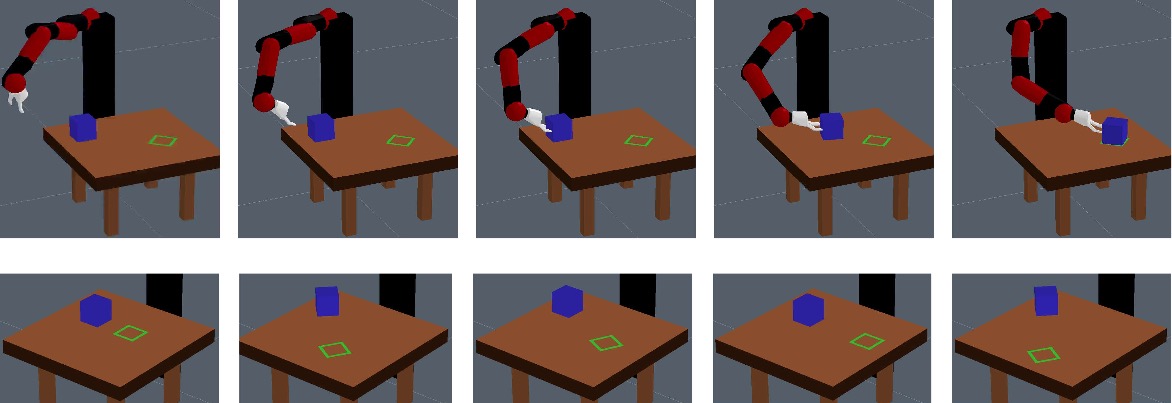

Sampling-based motion planning on manifold sequences

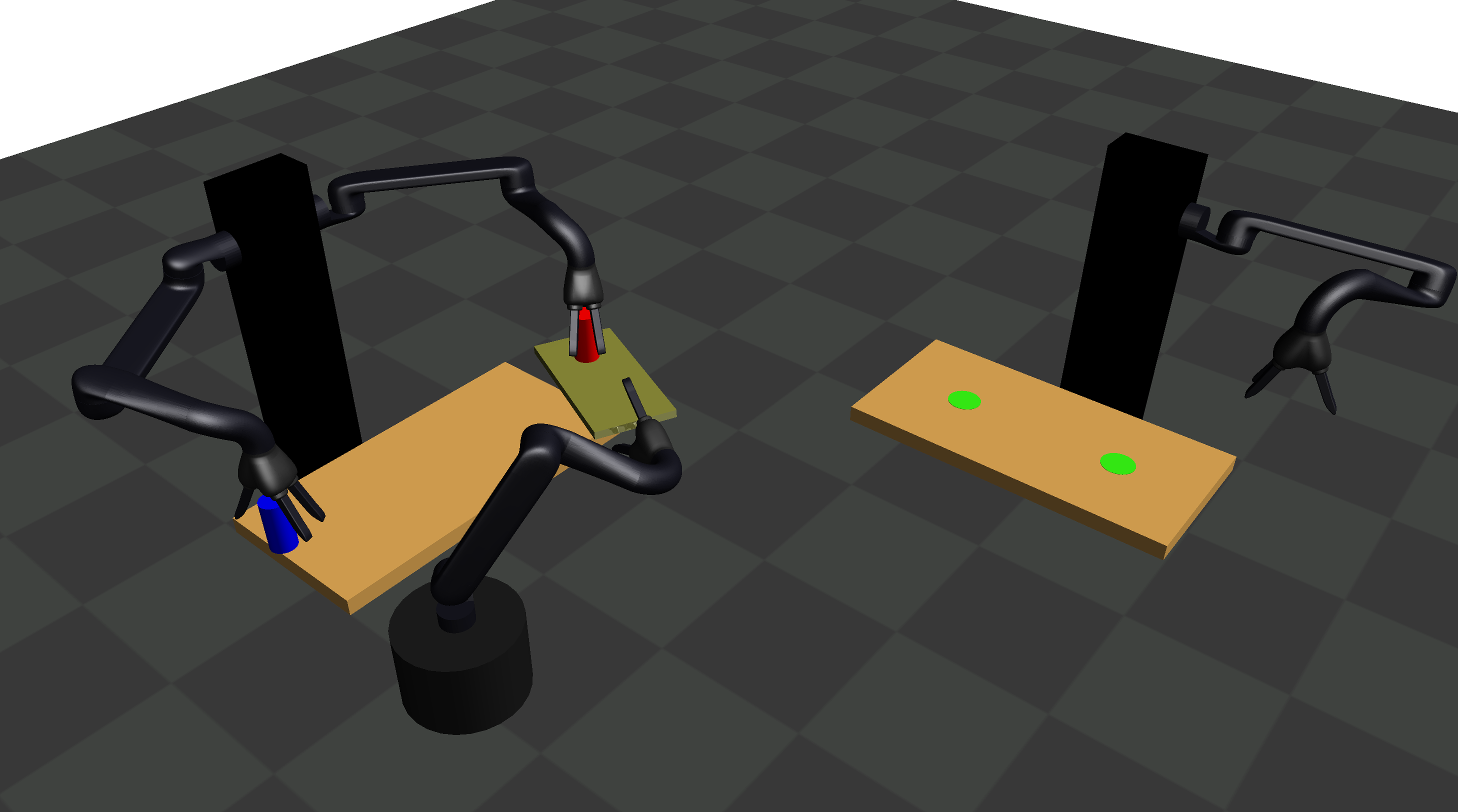

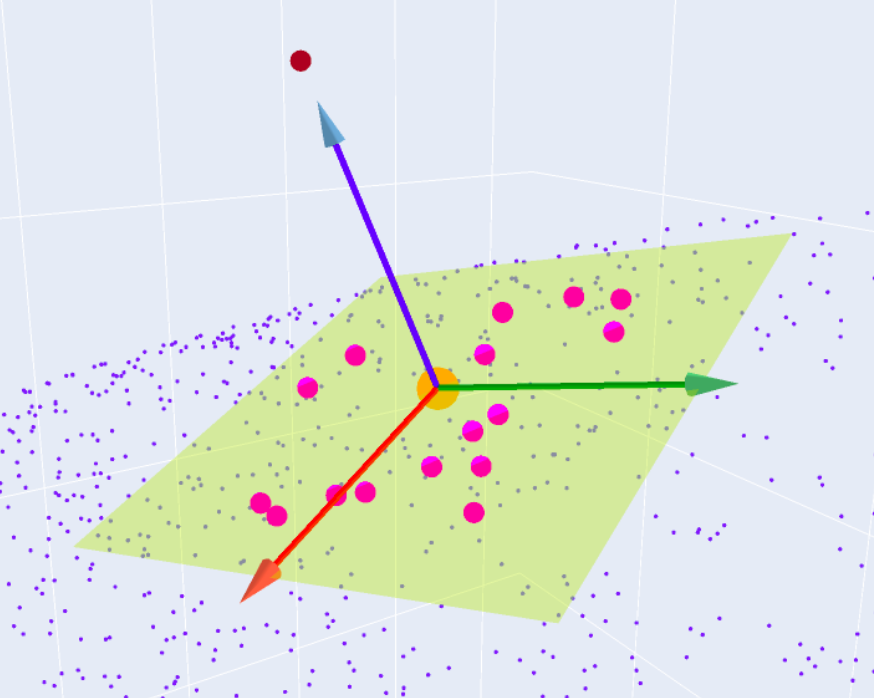

We address the problem of planning robot motions in constrained configuration spaces where the constraints change throughout the motion. The problem is formulated as a sequence of intersecting manifolds that the robot needs to traverse in order to solve the task. We specify a class of sequential motion planning problems that fulfill a particular property regarding how the free configuration space changes when transitioning between manifolds. For this problem class, we develop the algorithm Sequential Manifold Planning (SMP*), which searches for optimal intersection points between manifolds by using RRT* in an inner loop with a novel steering strategy. We provide a theoretical analysis of SMP*'s probabilistic completeness and asymptotic optimality. Further, we evaluate its planning performance on various multi-robot object transportation tasks.
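One subproblem named above is finding configurations on the intersection of two consecutive manifolds. The following is a minimal sketch of that subproblem only, not SMP* itself (which uses RRT* with its own steering strategy). It assumes each manifold is the zero set of a constraint function h_k(q), projects random samples onto the stacked constraints [h_a; h_b] with Gauss-Newton steps, and keeps the candidate closest to a start configuration as a simple cost proxy. All function names are hypothetical.

```python
import numpy as np


def numerical_jacobian(h, q, eps=1e-6):
    """Forward-difference Jacobian of a vector-valued constraint h at q."""
    h0 = np.atleast_1d(h(q))
    J = np.zeros((h0.size, q.size))
    for i in range(q.size):
        dq = np.zeros_like(q)
        dq[i] = eps
        J[:, i] = (np.atleast_1d(h(q + dq)) - h0) / eps
    return J


def project(h, q, tol=1e-8, max_iter=50):
    """Project q onto the manifold {q : h(q) = 0} with Gauss-Newton steps
    q <- q - pinv(J) h(q). Returns None if the iteration does not converge."""
    q = np.asarray(q, dtype=float).copy()
    for _ in range(max_iter):
        r = np.atleast_1d(h(q))
        if np.linalg.norm(r) < tol:
            return q
        q = q - np.linalg.pinv(numerical_jacobian(h, q)) @ r
        if not np.all(np.isfinite(q)):
            return None
    return None


def best_intersection(h_a, h_b, q_start, n_samples=200, seed=0):
    """Sample points on the intersection of manifolds a and b by projecting
    random configurations onto the stacked constraints, and return the one
    with the smallest Euclidean distance to q_start."""
    rng = np.random.default_rng(seed)

    def stacked(q):
        return np.concatenate([np.atleast_1d(h_a(q)), np.atleast_1d(h_b(q))])

    best, best_cost = None, np.inf
    for _ in range(n_samples):
        q = project(stacked, rng.uniform(-2.0, 2.0, size=q_start.size))
        if q is not None:
            cost = np.linalg.norm(q - q_start)
            if cost < best_cost:
                best, best_cost = q, cost
    return best
```

For example, with the unit sphere h_a(q) = q·q - 1 and the plane h_b(q) = q_z - 0.5 in R^3, the returned point lies on their circle of intersection, near the side facing q_start = (1, 0, 0).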

Sampling-Based Motion Planning on Sequenced Manifolds

Peter Englert, Isabel M. Rayas Fernández, Ragesh Kumar Ramachandran, and Gaurav S. Sukhatme

In Proceedings of Robotics: Science and Systems, 2021

[ bib | code | pdf | video ]

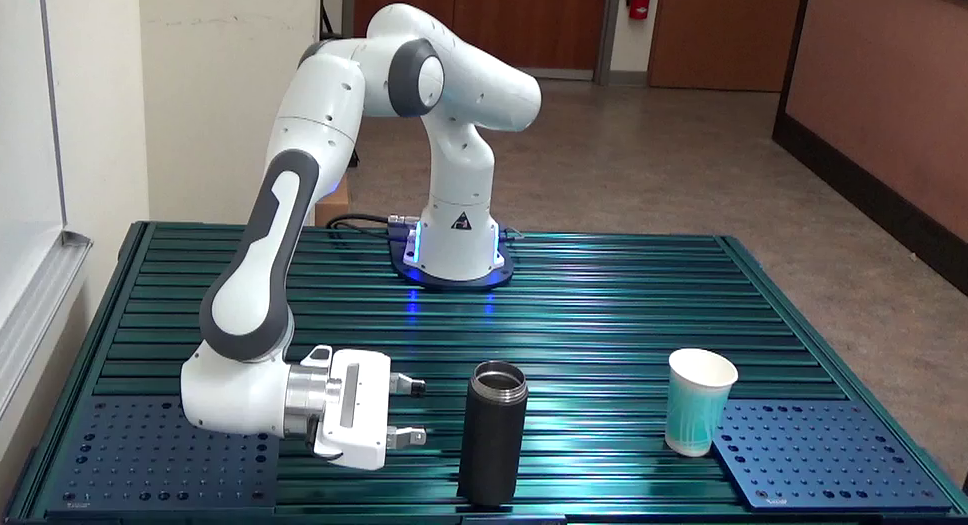

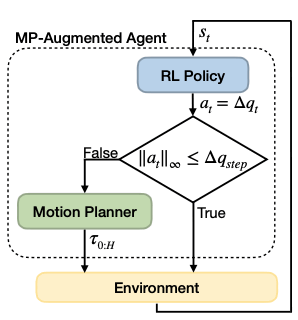

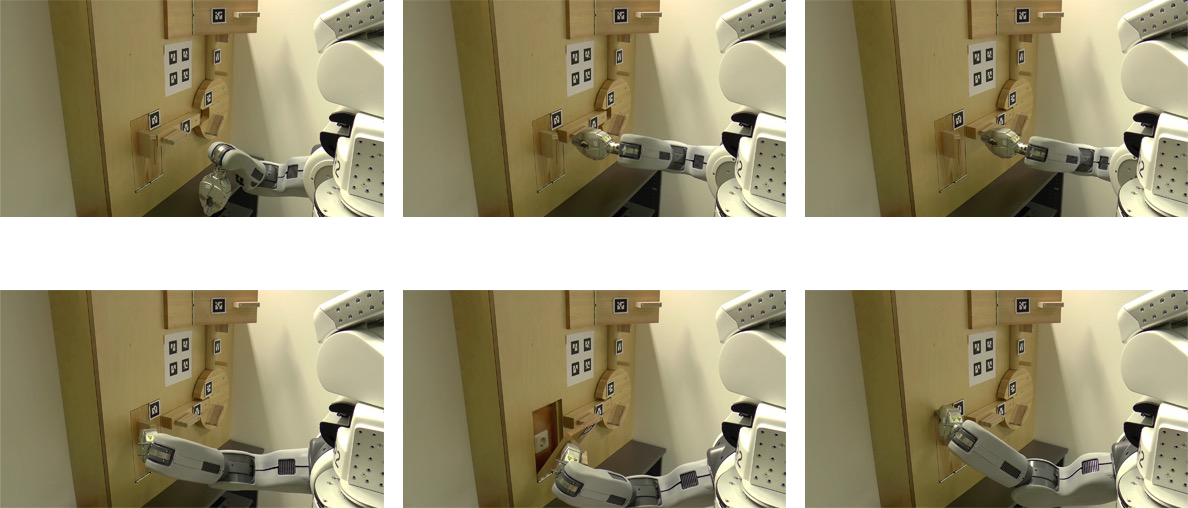

Motion planner augmented action spaces for reinforcement learning

Deep reinforcement learning (RL) agents are able to learn contact-rich manipulation tasks by maximizing a reward signal, but require large amounts of experience, especially in environments with many obstacles that complicate exploration. In contrast, motion planners use explicit models of the agent and environment to plan collision-free paths to faraway goals, but suffer from inaccurate models in tasks that require contact with the environment. To combine the benefits of both approaches, we propose motion planner augmented RL (MoPA-RL), which augments the action space of an RL agent with the long-horizon planning capabilities of motion planners. Based on the magnitude of the action, our approach smoothly transitions between directly executing the action and invoking a motion planner. We demonstrate that MoPA-RL increases learning efficiency, leads to faster exploration of the environment, and results in safer policies that avoid collisions with the environment.
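The magnitude-based switching described above can be sketched as follows. This is a simplified illustration under assumptions, not the MoPA-RL implementation: the threshold `omega`, the Euclidean norm used to measure action magnitude, and the straight-line `plan_path` stand-in (which performs no collision checking) are all hypothetical.

```python
import numpy as np


def plan_path(q_start, q_goal, max_step):
    """Hypothetical stand-in for a motion planner: straight-line interpolation
    in joint space with segments no longer than max_step. A real planner
    (e.g. an RRT variant) would also check for collisions."""
    dist = np.linalg.norm(q_goal - q_start)
    n_steps = max(int(np.ceil(dist / max_step)), 1)
    return [q_start + (q_goal - q_start) * (k / n_steps) for k in range(1, n_steps + 1)]


def execute_action(q, action, omega=0.1):
    """Interpret an RL action as a joint-space displacement.

    Actions with norm <= omega are executed directly as a single step;
    larger actions are passed to the planner, which returns a sequence of
    intermediate configurations ending at q + action."""
    if np.linalg.norm(action) <= omega:
        return [q + action]                   # direct execution
    return plan_path(q, q + action, omega)    # invoke the motion planner
```

The design choice is that the policy keeps a single continuous action space: small actions give fine, contact-friendly control, while large actions become long collision-free motions without the agent having to learn them step by step.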

Motion Planner Augmented Reinforcement Learning for Obstructed Environments

Jun Yamada, Youngwoon Lee, Gautam Salhotra, Karl Pertsch, Max Pflueger, Gaurav S. Sukhatme, Joseph J. Lim, and Peter Englert

In Conference on Robot Learning, 2020

[ bib | website | code | pdf ]

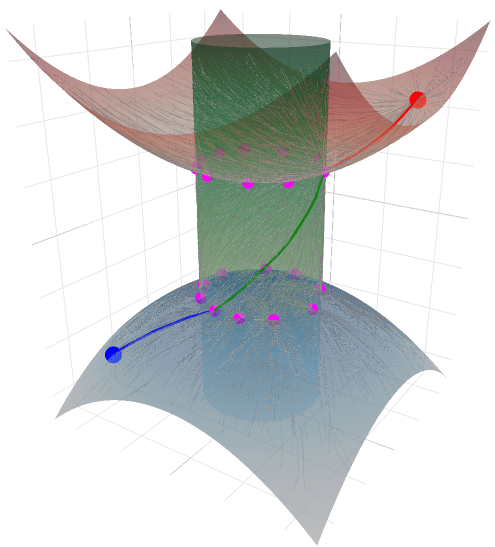

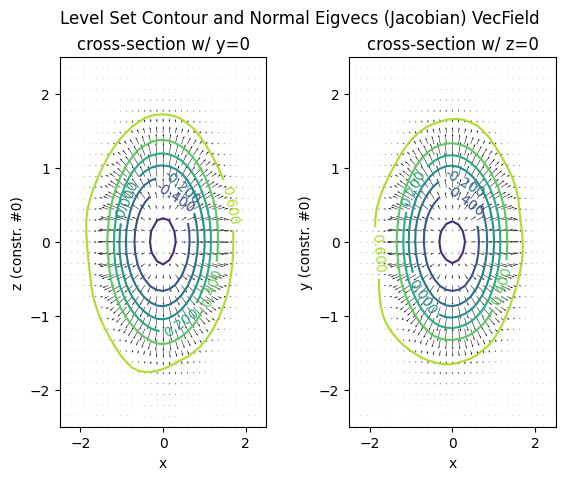

Learning manifolds for sequential motion planning

Constrained robot motion planning is a widely used technique to solve complex robot tasks. We consider the problem of learning representations of constraints from demonstrations with a deep neural network, which we call Equality Constraint Manifold Neural Network (ECoMaNN). The key idea is to learn a level-set function of the constraint suitable for integration into a constrained sampling-based motion planner. Learning proceeds by aligning subspaces in the network with subspaces of the data. We integrate both learned and analytically described constraints into the planner and use a projection-based strategy to find valid points. We evaluate ECoMaNN's ability to represent constraint manifolds, the impact of its individual loss terms, and the motions it produces when incorporated into a planner.
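The subspace-alignment idea above can be illustrated without a neural network. In this hedged sketch the level-set function h is hand-written rather than learned, the data's normal space is assumed to be estimated by local PCA over the k nearest neighbours, and misalignment is scored as one minus the squared cosine between the gradient of h and that normal space. The function names and the scoring rule are hypothetical, not the ECoMaNN loss terms.

```python
import numpy as np


def local_normal_space(data, center, k=10, manifold_dim=1):
    """Estimate the normal space of the data manifold at `center` by local PCA:
    among the k nearest neighbours, the principal directions with the largest
    variance span the tangent space, and the remaining ones span the normal space."""
    dists = np.linalg.norm(data - center, axis=1)
    nbrs = data[np.argsort(dists)[:k]]
    _, _, vt = np.linalg.svd(nbrs - nbrs.mean(axis=0))
    return vt[manifold_dim:]  # rows: orthonormal basis of the normal space


def alignment_loss(grad_h, normal_basis):
    """Misalignment between the level-set gradient and the estimated normal
    space: 0 if grad_h lies in that space, 1 if it is orthogonal to it."""
    g = grad_h / np.linalg.norm(grad_h)
    proj = normal_basis.T @ (normal_basis @ g)
    return 1.0 - proj @ proj
```

For points sampled on the unit circle, the gradient 2q of h(q) = ‖q‖² - 1 at (1, 0) is aligned with the estimated normal space (loss near 0), whereas a gradient along the tangent direction gives a loss near 1.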

Learning Equality Constraints for Motion Planning on Manifolds

Giovanni Sutanto, Isabel M. Rayas Fernández, Peter Englert, Ragesh K. Ramachandran, and Gaurav S. Sukhatme

In Conference on Robot Learning, 2020

[ bib | code | pdf | video ]

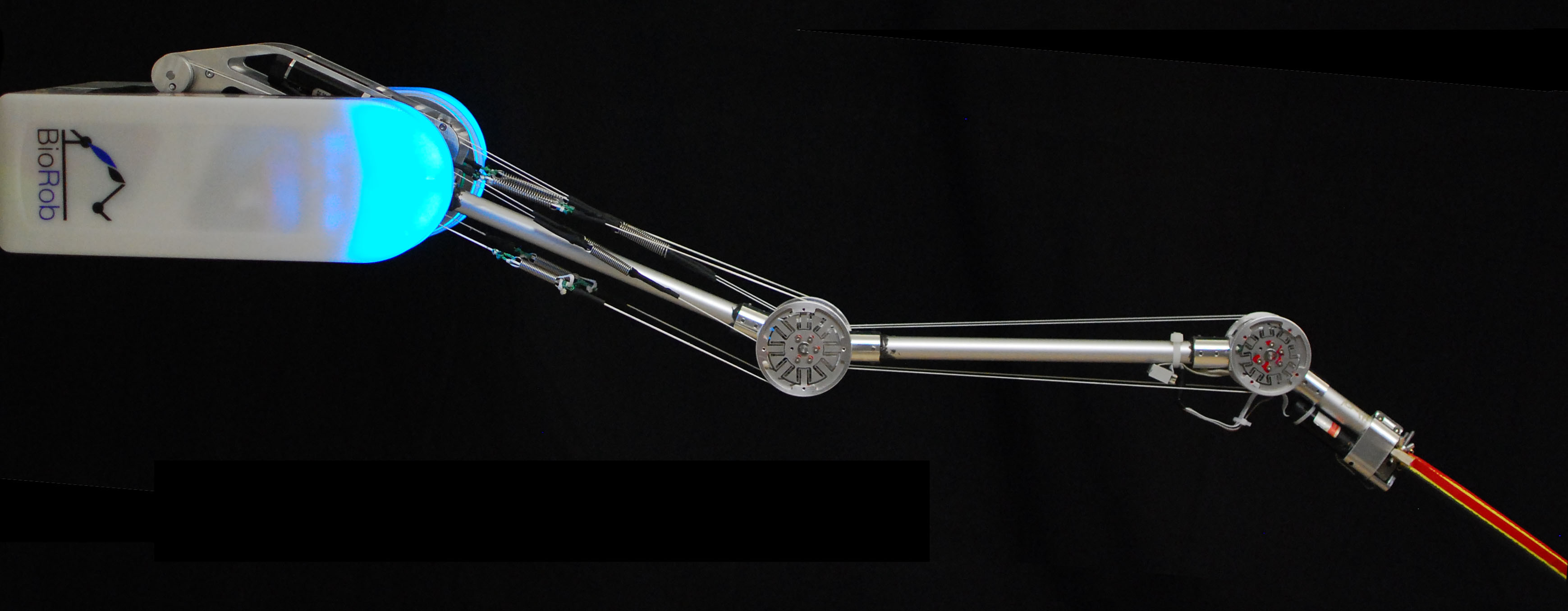

Combining optimization and reinforcement learning

As an alternative to the standard reinforcement learning formulation, where all objectives are defined in a single reward function, we propose to decompose the problem into analytically known objectives, such as motion smoothness, and black-box objectives, such as trial success or rewards that depend on interaction with the environment. The skill learning problem is separated into an optimal control part that improves the skill with respect to the known parts of the problem and a reinforcement learning part that learns the unknown parts by interacting with the environment.
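The decomposition above can be illustrated with a toy problem. In this minimal sketch, which is not the authors' algorithm, the known objective is the sum of squared accelerations of a 1-D trajectory, minimized exactly for a given via-point height theta, while the unknown objective is a hypothetical black-box `trial_success` test that only reports pass or fail. A plain random search over theta stands in for the reinforcement learning part; the horizon `T` and all names are assumptions.

```python
import numpy as np

T = 20  # trajectory length in time steps (assumption)


def smooth_trajectory(start, goal, via_time, via_value):
    """Known objective: minimise the sum of squared accelerations of a 1-D
    trajectory x_0..x_T subject to x_0 = start, x_T = goal and
    x_{via_time} = via_value, by solving the KKT system of this QP."""
    n = T + 1
    A = np.zeros((n - 2, n))          # second differences x_t - 2x_{t+1} + x_{t+2}
    for t in range(n - 2):
        A[t, t:t + 3] = [1.0, -2.0, 1.0]
    C = np.zeros((3, n))              # equality constraints C x = d
    C[0, 0] = C[1, T] = C[2, via_time] = 1.0
    d = np.array([start, goal, via_value])
    kkt = np.block([[A.T @ A, C.T], [C, np.zeros((3, 3))]])
    rhs = np.concatenate([np.zeros(n), d])
    return np.linalg.solve(kkt, rhs)[:n]


def trial_success(traj):
    """Hypothetical black-box outcome: the trial succeeds if the trajectory is
    above height 1.0 at time step 5. The learner only sees pass/fail."""
    return bool(traj[5] > 1.0)


def learn_via_point(start=0.0, goal=0.0, via_time=10, n_trials=200, seed=0):
    """Black-box part: random search over the via-point height theta, keeping
    the lowest theta whose smooth trajectory succeeds."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_trials):
        theta = rng.uniform(0.0, 3.0)
        if trial_success(smooth_trajectory(start, goal, via_time, theta)):
            if best is None or theta < best:
                best = theta
    return best
```

The point of the split is that the search only has to explore the single parameter theta; smoothness never has to be discovered by trial and error because the inner solver guarantees it for every candidate.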

Learning Manipulation Skills from a Single Demonstration

Peter Englert and Marc Toussaint

International Journal of Robotics Research 37(1):137–154, 2018

[ bib | pdf | video ]

Extracting compact task representations from depth data

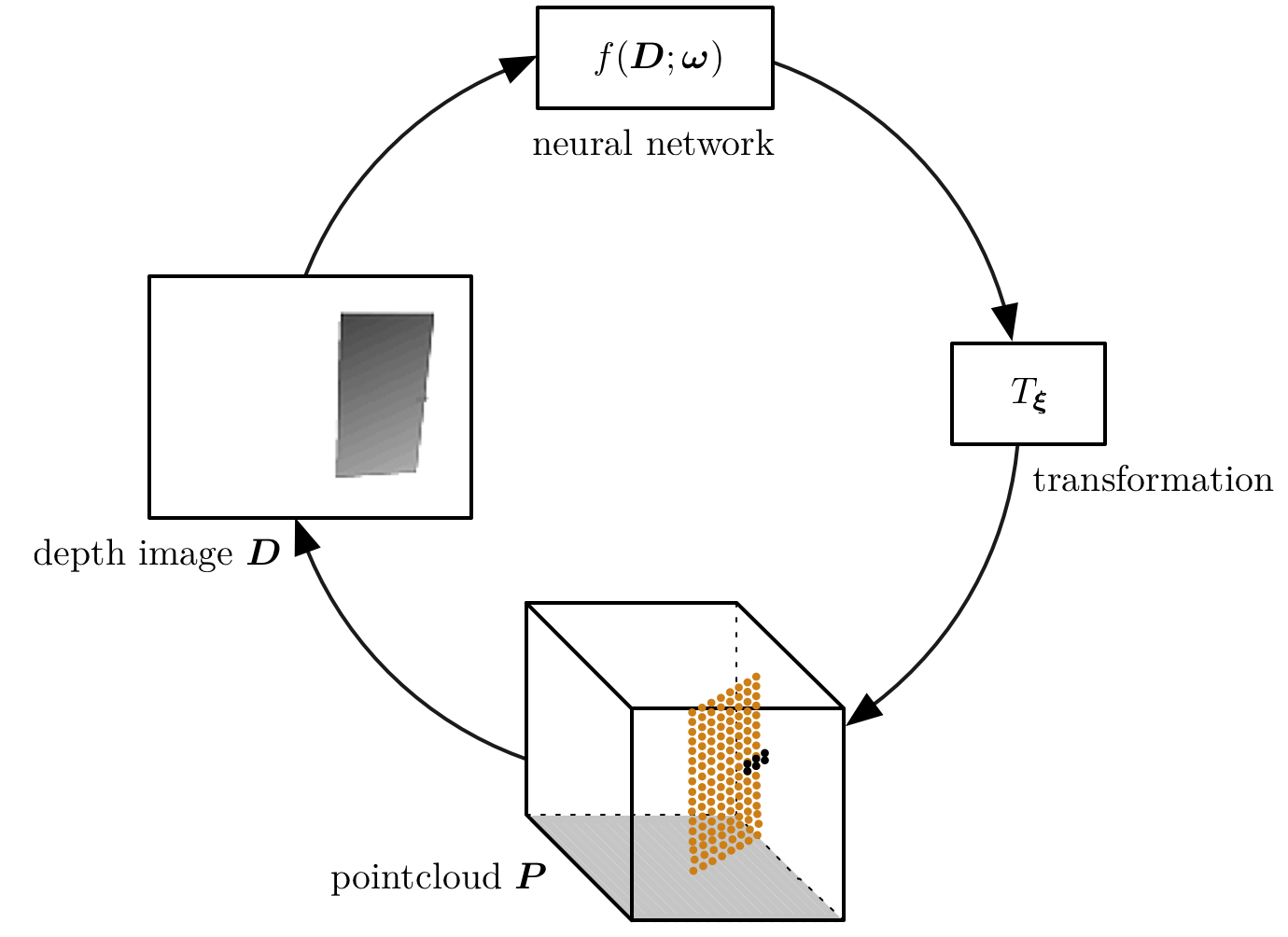

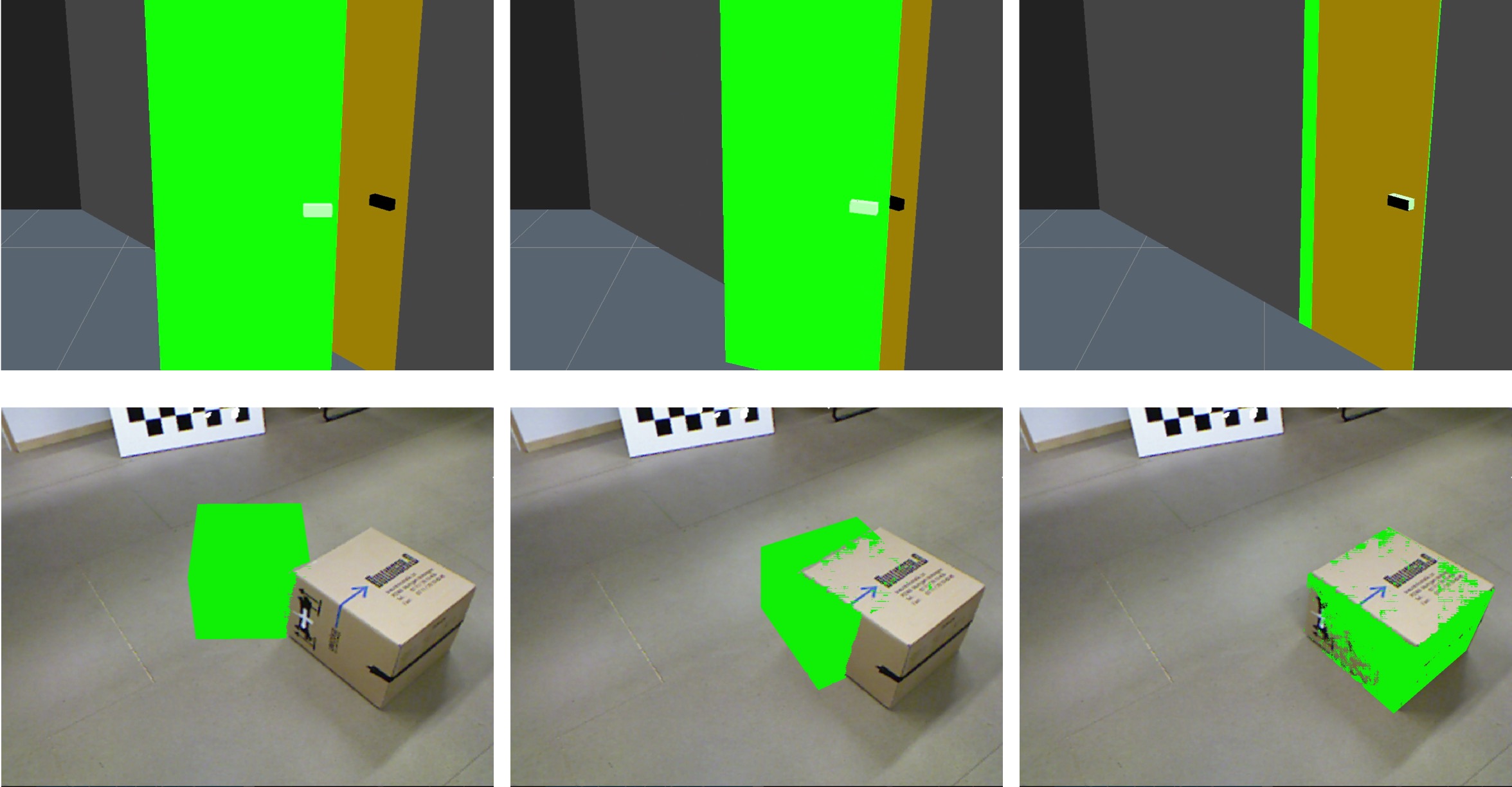

Kinematic morphing networks find the relation between different geometric environments and use this relation to transfer skills between them. We assume that the environment can be modeled as a kinematic structure and represented with a low-dimensional parametrization. A key element of this work is the use of the concatenation property of affine transformations together with the ability to convert point clouds to depth images, which allows the network to be applied iteratively.
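The concatenation property is what makes iterative application well defined: each pass predicts a correction transform, and all corrections compose by matrix multiplication into a single overall transform. The sketch below illustrates only that bookkeeping with homogeneous 4x4 matrices on a point cloud; the network and the point-cloud-to-depth-image conversion are omitted, and `predict_correction` is a hypothetical callable standing in for one network pass.

```python
import numpy as np


def transform(theta, t):
    """Homogeneous 4x4 transform: rotation by theta about z, then translation t."""
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:2, :2] = [[c, -s], [s, c]]
    T[:3, 3] = t
    return T


def apply(T, points):
    """Apply a homogeneous transform to an (N, 3) point cloud."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ T.T)[:, :3]


def iterative_alignment(points, predict_correction, n_iter=5):
    """Repeatedly predict a correction, apply it to the current point cloud,
    and accumulate all corrections into one transform by concatenation."""
    total = np.eye(4)
    current = points
    for _ in range(n_iter):
        delta = predict_correction(current)
        current = apply(delta, current)
        total = delta @ total  # later corrections multiply on the left
    return total, current
```

Because `total` always equals the product of all corrections, the final transform can be applied to the original geometry in one step, regardless of how many passes were used.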

Kinematic Morphing Networks for Manipulation Skill Transfer

Peter Englert and Marc Toussaint

In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, 2018

[ bib | pdf | video ]

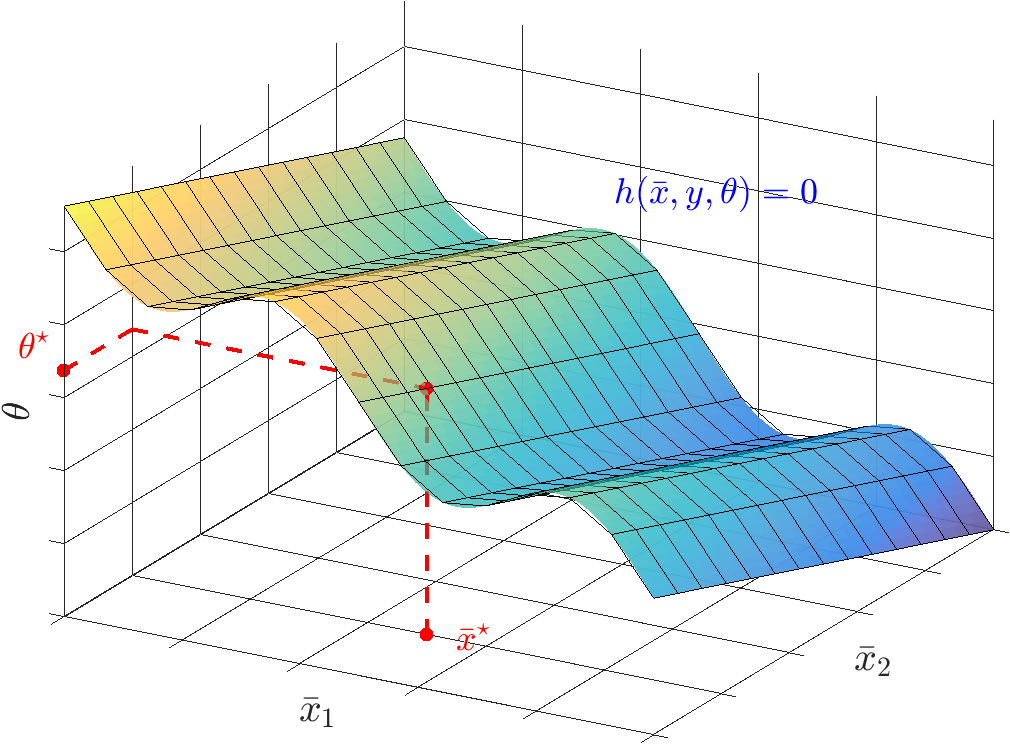

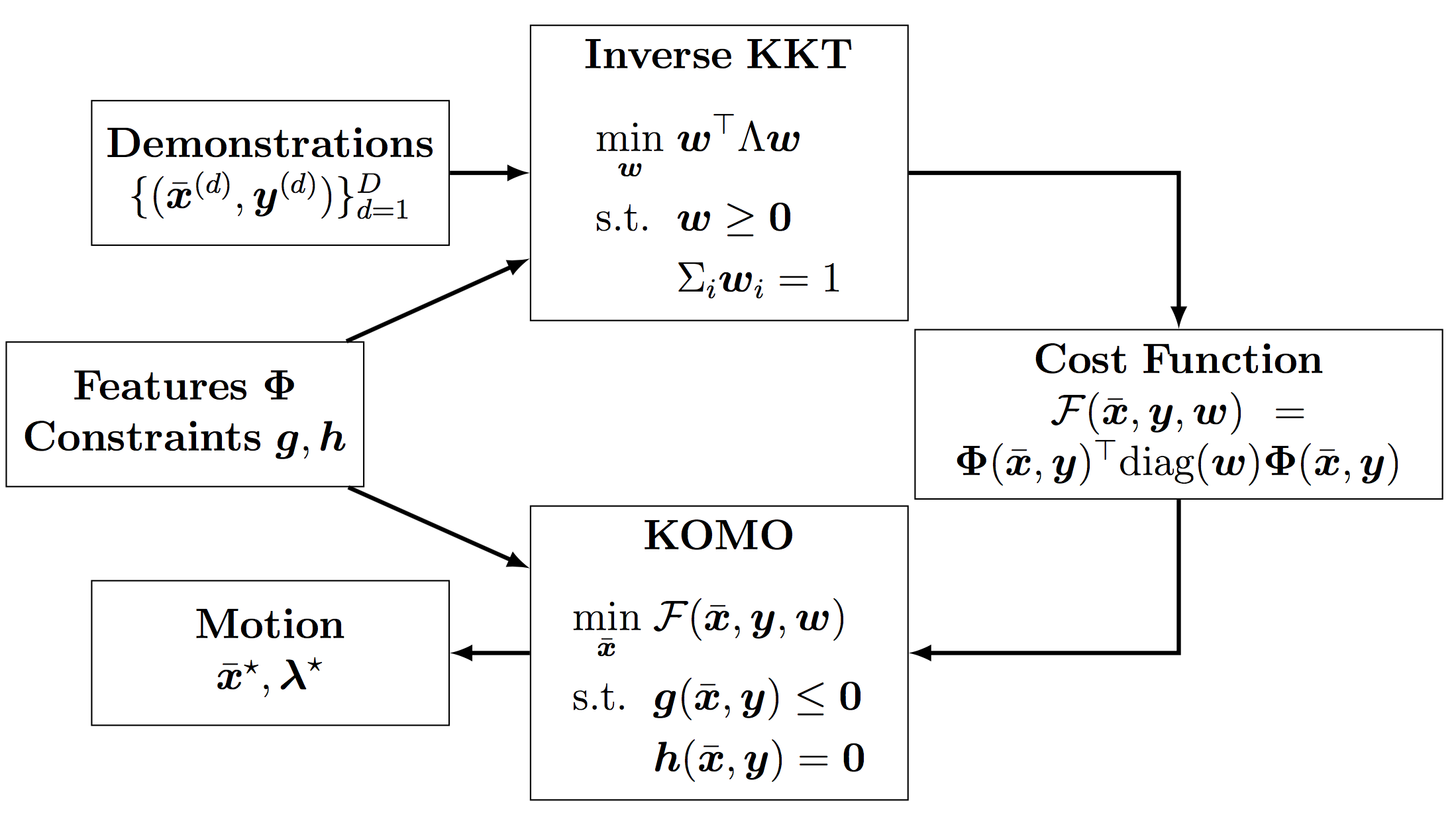

Learning generalizable skills from demonstrations

The algorithm extracts the essential features of a demonstrated task into a cost function that generalizes to various environment instances. For this purpose, it assumes that the demonstrations are optimal with respect to an underlying constrained optimization problem. The aim of this approach is to extend learning from demonstration to more complex manipulation scenarios that involve interaction with objects and therefore require contacts and constraints within the motion.
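The optimality assumption above can be illustrated on a toy example. In this minimal sketch we assume the cost is a weighted sum of known features, f(x) = Σ_i w_i φ_i(x), with a single equality constraint g(x) = 0. At an optimal demonstration x*, stationarity of the Lagrangian, Σ_i w_i ∇φ_i(x*) + λ ∇g(x*) = 0, is linear in the unknowns (w, λ), so the sketch takes the null-space direction of that system from an SVD and normalizes the weights to sum to one. The features, constraint, normalization, and function name are assumptions for illustration; the published formulation is more general.

```python
import numpy as np


def inverse_kkt(x_demo, feature_grads, constraint_grad):
    """Recover feature weights w and multiplier lam such that the demonstration
    satisfies stationarity: sum_i w_i grad phi_i(x) + lam * grad g(x) = 0.
    The system is linear and homogeneous in (w, lam); take its null-space
    direction from the SVD and normalise w to sum to one."""
    cols = [grad(x_demo) for grad in feature_grads] + [constraint_grad(x_demo)]
    M = np.column_stack(cols)
    _, _, vt = np.linalg.svd(M)
    z = vt[-1]                     # right singular vector of the smallest singular value
    w, lam = z[:-1], z[-1]
    scale = w.sum()                # also fixes the arbitrary sign of z
    return w / scale, lam / scale
```

With φ_1 = ‖x - a‖², φ_2 = ‖x - b‖², a = (0, 0), b = (2, 0) and g(x) = x_1 + x_2 - 1, the point x* = (1.25, -0.25) is the constrained optimum for weights (0.25, 0.75), and the sketch recovers those weights together with λ = 0.5.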

Inverse KKT – Learning Cost Functions of Manipulation Tasks from Demonstrations

Peter Englert, Ngo Anh Vien, and Marc Toussaint

International Journal of Robotics Research 36(13–14):1474–1488, 2017

[ bib | pdf ]

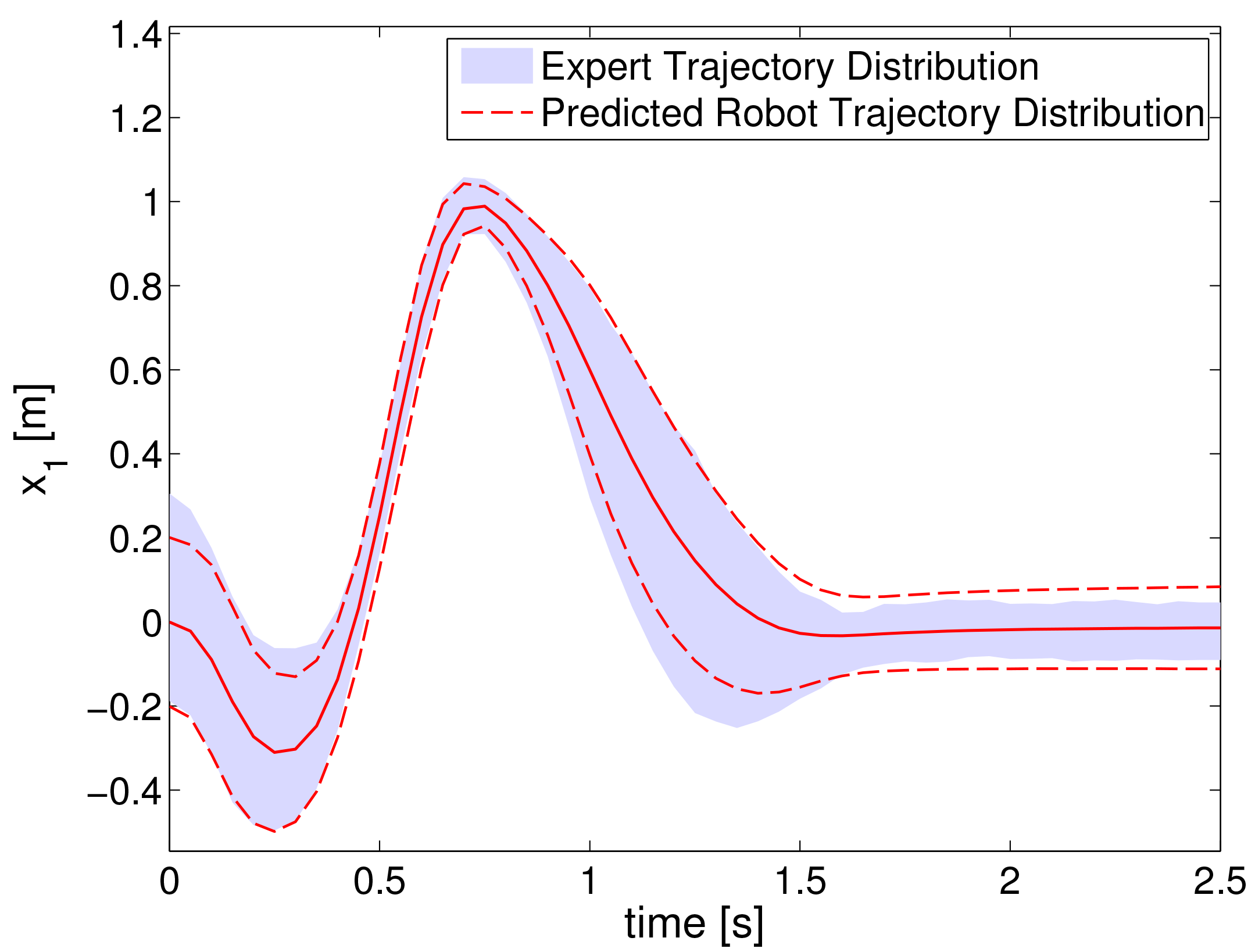

Learning with probabilistic models

Probabilistic models such as Gaussian processes are a natural choice when an estimate of the model's uncertainty is important for the task. In [1], we proposed a probabilistic imitation learning formulation that learns a robot dynamics model from data. This model is used to perform probabilistic trajectory matching to imitate the distribution of expert demonstrations. In [2], the robot uses only its tactile sensors to explore the shape of an unknown object by sliding over it. Gaussian processes represent the implicit surface of the unknown object, and the model's uncertainty guides the exploration toward regions of highest uncertainty.
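Uncertainty-guided exploration can be sketched with plain GP regression. This hedged example is not the method of [2], which plans tactile query paths over an implicit-surface model; it fits a 1-D GP with a squared-exponential kernel to a few observations and selects the candidate with the highest posterior variance as the next query. The kernel hyperparameters, noise level, and function names are assumptions.

```python
import numpy as np


def rbf(x1, x2, length=0.5, var=1.0):
    """Squared-exponential kernel between two 1-D input arrays."""
    d = x1[:, None] - x2[None, :]
    return var * np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_train, y_train, x_test, noise=1e-4):
    """GP posterior mean and variance at x_test (zero prior mean)."""
    K = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    K_s = rbf(x_train, x_test)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = rbf(x_test, x_test).diagonal() - np.sum(v ** 2, axis=0)
    return mean, var


def next_query(x_train, y_train, candidates):
    """Active learning step: query where the posterior variance is largest."""
    _, var = gp_posterior(x_train, y_train, candidates)
    return candidates[np.argmax(var)]
```

With observations only near x = 0, the variance grows with distance from the data, so the next query lands at the far end of the candidate range; after adding that observation, the variance there collapses and the next query moves elsewhere.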

[1] Probabilistic Model-based Imitation Learning

Peter Englert, Alexandros Paraschos, Marc Peter Deisenroth, and Jan Peters

Adaptive Behavior Journal 21(5):388–403, 2013

[ bib | pdf ]

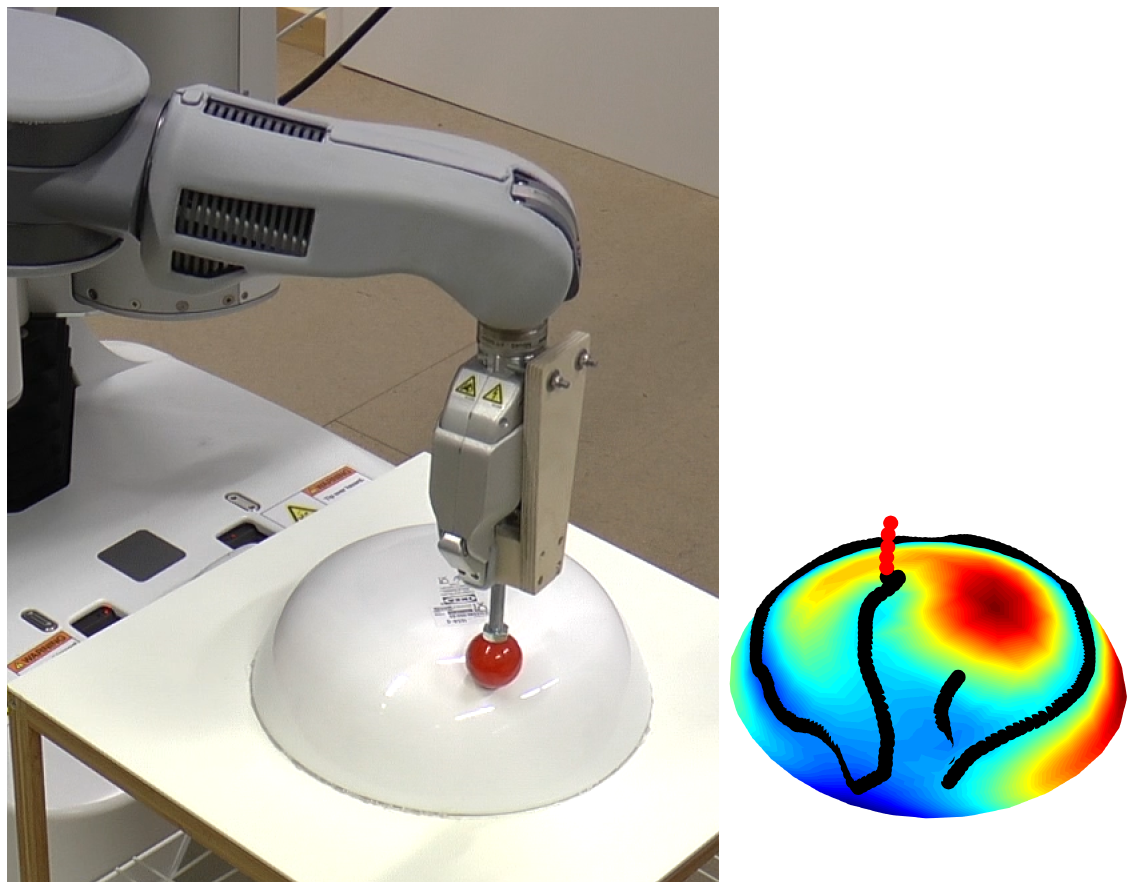

[2] Active Learning with Query Paths for Tactile Object Shape Exploration

Danny Driess, Peter Englert, and Marc Toussaint

In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, 2017

[ bib | pdf | video ]

Publications

Sampling-Based Motion Planning on Sequenced Manifolds

Peter Englert, Isabel M. Rayas Fernández, Ragesh Kumar Ramachandran, and Gaurav S. Sukhatme

In Proceedings of Robotics: Science and Systems, 2021

[ bib | code | pdf | video ]

Motion Planner Augmented Reinforcement Learning for Obstructed Environments

Jun Yamada, Youngwoon Lee, Gautam Salhotra, Karl Pertsch, Max Pflueger, Gaurav S. Sukhatme, Joseph J. Lim, and Peter Englert

In Conference on Robot Learning, 2020

[ bib | website | code | pdf ]

Learning Equality Constraints for Motion Planning on Manifolds

Giovanni Sutanto, Isabel M. Rayas Fernández, Peter Englert, Ragesh K. Ramachandran, and Gaurav S. Sukhatme

In Conference on Robot Learning, 2020

[ bib | code | pdf | video ]

Learning Manifolds for Sequential Motion Planning

Isabel M. Rayas Fernández, Giovanni Sutanto, Peter Englert, Ragesh K. Ramachandran, and Gaurav S. Sukhatme

RSS Workshop on Learning (in) Task and Motion Planning, 2020

[ bib | pdf ]

Kinematic Morphing Networks for Manipulation Skill Transfer

Peter Englert and Marc Toussaint

In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, 2018

[ bib | pdf | video ]

Learning Manipulation Skills from a Single Demonstration

Peter Englert and Marc Toussaint

International Journal of Robotics Research 37(1):137–154, 2018

[ bib | pdf | video ]

Inverse KKT – Learning Cost Functions of Manipulation Tasks from Demonstrations

Peter Englert, Ngo Anh Vien, and Marc Toussaint

International Journal of Robotics Research 36(13–14):1474–1488, 2017

[ bib | pdf ]

Active Learning with Query Paths for Tactile Object Shape Exploration

Danny Driess, Peter Englert, and Marc Toussaint

In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, 2017

[ bib | pdf | video ]

Constrained Bayesian Optimization of Combined Interaction Force/Task Space Controllers for Manipulations

Danny Driess, Peter Englert, and Marc Toussaint

In Proceedings of the IEEE International Conference on Robotics and Automation, 2017

[ bib | pdf | video ]

Identification of Unmodeled Objects from Symbolic Descriptions

Andrea Baisero, Stefan Otte, Peter Englert, and Marc Toussaint

arXiv:1701.06450, 2017

[ bib | pdf ]

Policy Search in Reproducing Kernel Hilbert Space

Vien Ngo Anh, Peter Englert, and Marc Toussaint

In Proceedings of the International Joint Conference on Artificial Intelligence, 2016

[ bib | pdf ]

Combined Optimization and Reinforcement Learning for Manipulation Skills

Peter Englert and Marc Toussaint

In Proceedings of Robotics: Science and Systems, 2016

[ bib | pdf | video ]

Sparse Gaussian Process Regression for Compliant, Real-Time Robot Control

Jens Schreiter, Peter Englert, Duy Nguyen-Tuong, and Marc Toussaint

In Proceedings of the IEEE International Conference on Robotics and Automation, 2015

[ bib | pdf ]

Inverse KKT – Learning Cost Functions of Manipulation Tasks from Demonstrations

Peter Englert and Marc Toussaint

In Proceedings of the International Symposium on Robotics Research, 2015

[ bib | pdf | video ]

Dual Execution of Optimized Contact Interaction Trajectories

Marc Toussaint, Nathan Ratliff, Jeannette Bohg, Ludovic Righetti, Peter Englert, and Stefan Schaal

In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, 2014

[ bib | pdf ]

Inverse KKT Motion Optimization: A Newton Method to Efficiently Extract Task Spaces and Cost Parameters from Demonstrations

Peter Englert and Marc Toussaint

NIPS Workshop on Autonomously Learning Robots, 2014

[ bib | pdf ]

Reactive Phase and Task Space Adaptation for Robust Motion Execution

Peter Englert and Marc Toussaint

In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, 2014

[ bib | pdf ]

Multi-Task Policy Search for Robotics

Marc Peter Deisenroth, Peter Englert, Jan Peters, and Dieter Fox

In Proceedings of the IEEE International Conference on Robotics and Automation, 2014

[ bib | pdf ]

Model-based Imitation Learning by Probabilistic Trajectory Matching

Peter Englert, Alexandros Paraschos, Jan Peters, and Marc Peter Deisenroth

In Proceedings of the IEEE International Conference on Robotics and Automation, 2013

[ bib | pdf ]

Probabilistic Model-based Imitation Learning

Peter Englert, Alexandros Paraschos, Marc Peter Deisenroth, and Jan Peters

Adaptive Behavior Journal 21(5):388–403, 2013

[ bib | pdf ]

Contact

englertpr AT gmail.com